16. SegLocator

16.1 Coordinate System

16.2 Six Examples

16.3 Segment Output

In this chapter, we introduce the Segment Locator, or SegLocator.

The SegLocator is a specialized NeuralNet Filter that locates a segment and prints its coordinates.

The SegLocator works the same way as the ImageFinder; however, it supports only 1:N Matching in the directory input mode, i.e. the SegLocator does not support the file input and long-search modes. The available commands are:

- SegLocator/Training

- SegLocator/1:N Match

- SegLocator/Batch Run


The data for this chapter consists of the four images below:

Figure 16.1 The data consists of 4 images.

Note: Each image contains 9 logos.

There are 9 trademarks placed at random positions in the four images. We want the SegLocator not only to recognize each image segment but also to locate its position.

Figure 16.2 Segment Specification (origin O; axes x and y).

The image segment is specified by 5 numbers:

(x, y, w, h, r)

where:

x is the x-coordinate of the upper left corner;

y is the y-coordinate of the upper left corner;

w is the x-length of the segment (width);

h is the y-length of the segment (height); and

r is the rotation angle.
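The five numbers map naturally onto a small record type. The sketch below is illustrative only: the class name Segment and the method lower_right are hypothetical, not part of the ImageFinder, and the corner computation assumes r = 0 (no rotation), which holds in every example in this chapter.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Segment:
    """Hypothetical container for a SegLocator segment (x, y, w, h, r)."""

    x: int  # x-coordinate of the upper left corner
    y: int  # y-coordinate of the upper left corner
    w: int  # width: extent along the x-axis
    h: int  # height: extent along the y-axis
    r: int = 0  # rotation angle

    def lower_right(self) -> Tuple[int, int]:
        # The y-axis points down, so the opposite corner is (x + w, y + h).
        # Rotation is ignored here, i.e. this assumes r == 0.
        return (self.x + self.w, self.y + self.h)


# First "United Way" result for image002.jpg in the examples of this chapter.
seg = Segment(34, 7, 26, 20, 0)
print(seg.lower_right())  # (60, 27)
```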

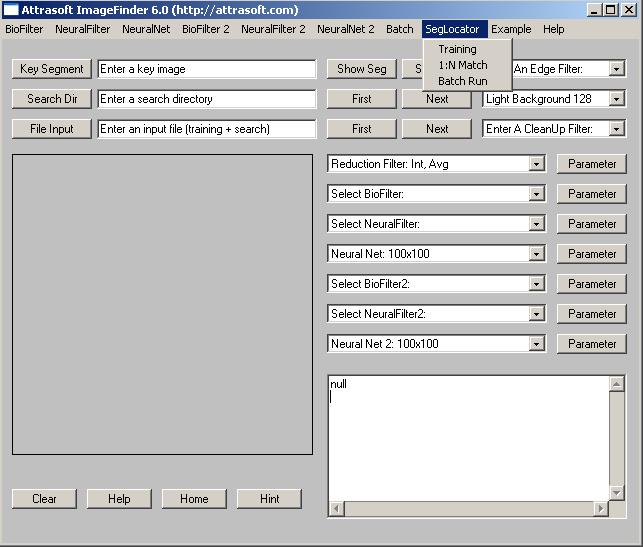

Figure 16.3 SegLocator.

The default Representation is 100x100. The x coordinate runs from 0 to 100 and the y coordinate runs from 0 to 100. (This depends on the NeuralNet filter selected. If you choose a 50x50 NeuralNet Filter instead of 100x100, then the x coordinate runs from 0 to 50 and the y coordinate runs from 0 to 50.) The origin of the coordinate system is at the upper left corner. The x-axis runs from left to right and the y-axis runs from top to bottom.
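Because the coordinate range follows the NeuralNet Filter size, results from a 100x100 filter and a 50x50 filter are only comparable after normalizing them. A minimal sketch, assuming the filter grid spans the whole image uniformly; the function name normalize is hypothetical:

```python
from typing import Tuple


def normalize(x: float, y: float, grid: int = 100) -> Tuple[float, float]:
    """Map a point on a grid x grid NeuralNet Filter to fractions of the image.

    The origin is the upper left corner; x grows to the right and y grows
    downward, so (0, 0) is the upper left and (grid, grid) the lower right.
    """
    if not (0 <= x <= grid and 0 <= y <= grid):
        raise ValueError(f"({x}, {y}) lies outside the 0..{grid} range")
    return (x / grid, y / grid)


# The same relative position on a 100x100 filter and on a 50x50 filter.
print(normalize(50, 25))             # (0.5, 0.25)
print(normalize(25, 12.5, grid=50))  # (0.5, 0.25)
```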

To find a segment in an image, you can use:

- SegLocator/Training followed by SegLocator/1:N Match; or

- SegLocator/Batch Run.

1. The United Way.

You can duplicate this example by two clicks:

- Click "Example/SegLocator/United Way";

- Click "SegLocator/Batch Run".

Input

Key Segment = image002

NeuralNet Parameter

Training Segment 98 16 98 81

The segment picked is 98 16 98 81. This is in 300x300 scale because the ImageFinder display area is 300 x 300 pixels. The output is in 100x100 scale, and (98, 16, 98, 81) in 300x300 scale converts to (33, 5, 33, 27) in 100x100 scale. The output is:

image002.jpg 86976

34 7 26 20 0

image004.jpg 86528

2 4 26 20 0

image006.jpg 84608

63 33 26 20 0

image008.jpg 82368

8 10 26 20 0

In all 4 cases, the "United Way" segments are correctly identified.
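Each result occupies two lines: the image file name followed by a number, then the segment as x y w h r. A small parser makes the listing easier to post-process. This is a sketch: the names Match and parse_output are hypothetical, and calling the number after the file name a match score is an assumption, since the text does not name it.

```python
from typing import List, NamedTuple


class Match(NamedTuple):
    image: str
    value: int  # number printed after the file name (assumed to be a match score)
    x: int
    y: int
    w: int
    h: int
    r: int


def parse_output(text: str) -> List[Match]:
    """Parse SegLocator output: a 'file value' line followed by an 'x y w h r' line."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if len(rows) % 2 != 0:
        raise ValueError("expected pairs of lines")
    matches = []
    for head, coords in zip(rows[0::2], rows[1::2]):
        x, y, w, h, r = (int(t) for t in coords)
        matches.append(Match(head[0], int(head[1]), x, y, w, h, r))
    return matches


UNITED_WAY = """
image002.jpg 86976
34 7 26 20 0
image004.jpg 86528
2 4 26 20 0
image006.jpg 84608
63 33 26 20 0
image008.jpg 82368
8 10 26 20 0
"""

for m in parse_output(UNITED_WAY):
    print(m.image, (m.x, m.y, m.w, m.h))
```

Blank lines are skipped, so the parser accepts the listing whether or not the results are separated by empty lines.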

The output for the first image is (34, 7, 26, 20), while the original training segment is (33, 5, 33, 27). The difference arises because the SegLocator locates the image segment without the surrounding blank area, whereas the original training segment contains a blank area.
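Both steps of this reasoning can be checked numerically: rescale the training segment from the 300x300 display to the 100x100 output scale, then confirm that the located segment lies inside it. In this sketch the function names are hypothetical, and rounding half up is an assumed rule; it reproduces the figures quoted in the text, but the SegLocator's own rounding is not documented here.

```python
import math


def to_scale(box, src=300, dst=100):
    """Rescale (x, y, w, h) from a src x src display to a dst x dst grid,
    rounding half up to the nearest integer (assumed rounding rule)."""
    return tuple(math.floor(v * dst / src + 0.5) for v in box)


def contains(outer, inner):
    """True if box inner = (x, y, w, h) lies entirely within box outer."""
    ox, oy, ow, oh = outer
    ix, iy, iw, ih = inner
    return ox <= ix and oy <= iy and ix + iw <= ox + ow and iy + ih <= oy + oh


training = to_scale((98, 16, 98, 81))  # United Way training segment, 300x300 scale
located = (34, 7, 26, 20)              # SegLocator result for image002.jpg
print(training, contains(training, located))  # (33, 5, 33, 27) True
```

The same check applies to the Monopoly example: (85, 117, 110, 68) rescales to (28, 39, 37, 23), which contains the located segment (31, 42, 29, 8).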

2. Monopoly.

You can duplicate this example by two clicks:

- Click "Example/SegLocator/Monopoly";

- Click "SegLocator/Batch Run".

Input

Key Segment = image002

NeuralNet Parameter

Training Segment 85 117 110 68

The segment picked, in 300x300 scale, is 85 117 110 68, which translates to (28, 39, 37, 23) in 100x100 scale. The output is:

image002.jpg 78784

31 42 29 8 0

image004.jpg 78336

35 74 29 8 0

image006.jpg 78080

34 77 29 8 0

image008.jpg 76480

7 77 29 8 0

In all 4 cases, the "Monopoly" segments are correctly identified.

The output for the first image is (31, 42, 29, 8), while the original training segment is (28, 39, 37, 23). As in the first example, the difference arises because the SegLocator locates the image segment without the surrounding blank area, whereas the original training segment contains a blank area.

3. Mr. Potato.

You can duplicate this example by two clicks:

- Click "Example/SegLocator/Mr. Potato";

- Click "SegLocator/Batch Run".

Input

Key Segment = image002

NeuralNet Parameter

Training Segment is 206 17 74 88

The segment picked, in 300x300 scale, is 206 17 74 88. The output is:

image002.jpg 79552

73 9 12 20 0

image004.jpg 78080

15 68 12 20 0

image006.jpg 78336

40 41 12 20 0

image008.jpg 75584

48 11 12 20 0

In all 4 cases, the "Mr. Potato" segments are correctly identified.

4. AAA.

You can duplicate this example by two clicks:

- Click "Example/SegLocator/AAA";

- Click "SegLocator/Batch Run".

Input

Key Segment = image002

NeuralNet Parameter

Training Segment is 109 194 74 75

The output is:

image002.jpg 79872

37 66 19 14 0

image004.jpg 79296

68 41 19 14 0

image006.jpg 77376

7 12 19 14 0

image008.jpg 76288

39 43 19 14 0

In all 4 cases, the "AAA" segments are correctly identified.

5. Ford.

You can duplicate this example by two clicks:

- Click "Example/SegLocator/Ford";

- Click "SegLocator/Batch Run".

Input

Key Segment = image002

NeuralNet Parameter

Training Segment is 195 202 92 60

The output is:

image002.jpg 78976

62 70 22 8 0

image004.jpg 78912

66 14 22 8 0

image006.jpg 77888

40 16 22 8 0

image008.jpg 75904

7 47 22 8 0

In all 4 cases, the "Ford" segments are correctly identified.

6. Soup.

You can duplicate this example by two clicks:

- Click "Example/SegLocator/Soup";

- Click "SegLocator/Batch Run".

Input

Key Segment = image002

Image Processing

Threshold File = Customized filter:

Red: 30 - 127, Light Background

Green: Ignore

Blue: Ignore

NeuralNet Parameter

Training Segment is 18 14 53 69

Blurring = 2

Segment Size = S(Small) Segment
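The customized threshold filter above examines only the red channel. The sketch below shows one plausible reading of it, and it rests on assumptions: that pixels whose red value lies in 30 to 127 are kept as the object, that "Light Background" means all other pixels are treated as background, and that the function name red_threshold_mask is hypothetical. It does not reproduce the ImageFinder's internal filter.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0..255


def red_threshold_mask(image: List[List[Pixel]], lo: int = 30, hi: int = 127) -> List[List[bool]]:
    """Flag pixels whose red value lies in [lo, hi]; green and blue are ignored.

    Assumption: with a light background, in-range pixels are kept as the
    object and all other pixels are treated as background.
    """
    return [[lo <= red <= hi for (red, _green, _blue) in row] for row in image]


row = [(20, 0, 0), (30, 255, 255), (127, 0, 0), (128, 0, 0)]
print(red_threshold_mask([row]))  # [[False, True, True, False]]
```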

The output is:

image002.jpg 2400000

10 7 8 13 0

image004.jpg 48000

50 46 8 13 0

image006.jpg 60000

18 69 8 13 0

image008.jpg 48000

73 20 8 13 0

In all 4 cases, the "Soup" segments are correctly identified.

The output of the SegLocator is within an area of 100x100. Interpretation of the SegLocator's output depends on:

- Image Size;

- NeuralNet Filter;

- Reduction Filter.

Assume:

- An image is 256x384;

- Neural Net is 100x100; and

- The Reduction Filter is Integer.

Assume:

- An image is 256x384;

- Neural Net is 100x100; and

- The Reduction Filter is Real.
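As a hypothetical illustration of mapping 100x100 output back to image pixels, the sketch below assumes that a Real Reduction Filter stretches the image independently along each axis onto the grid, and that 256 is the image width and 384 its height. Neither assumption is confirmed in this section, and the Integer case is not sketched. The function name to_pixels is hypothetical.

```python
def to_pixels(box, image_w, image_h, grid=100):
    """Map (x, y, w, h) from a grid x grid SegLocator output to image pixels.

    Hypothetical: assumes the Real Reduction Filter stretches the image
    independently along each axis, so x and w scale by image_w / grid and
    y and h scale by image_h / grid.
    """
    x, y, w, h = box
    return (x * image_w / grid, y * image_h / grid,
            w * image_w / grid, h * image_h / grid)


# Assuming 256 is the image width and 384 its height.
print(to_pixels((50, 25, 10, 20), 256, 384))  # (128.0, 96.0, 25.6, 76.8)
```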